Apple has revealed a new method for improving its Apple Intelligence models by analyzing user data on-device while maintaining strict privacy standards. Announced on April 14, 2025, in a research post, the approach uses differential privacy to learn aggregate trends in user behavior without accessing any individual user's data. It aims to improve AI features like email summarization and text generation, but it has also raised questions about privacy and trust in the tech industry.

The move is part of Apple’s broader strategy to enhance its AI capabilities while staying true to its privacy-first ethos. With competitors like OpenAI and Google advancing rapidly in AI, Apple is under pressure to deliver more effective models, and this method could meaningfully improve features that depend on understanding how people actually use their devices.

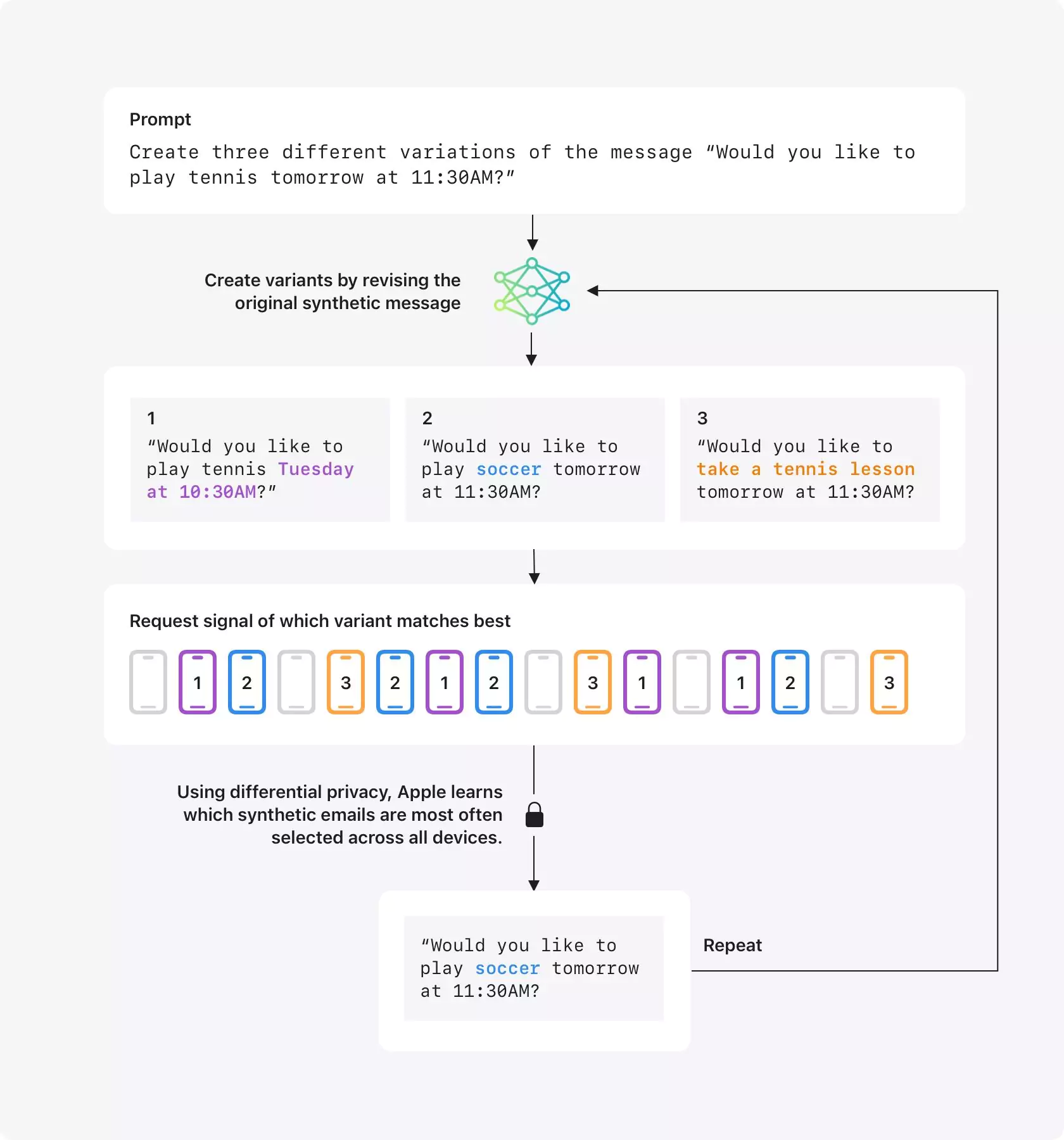

Apple’s technique involves generating synthetic data that reflects aggregate trends in user behavior. The process starts with creating synthetic messages on various topics, such as “Would you like to play tennis tomorrow at 11:30 AM?” These messages are turned into embeddings: numerical representations that capture elements like language, topic, and length. Devices belonging to users who have opted into Device Analytics then compare these embeddings to a sample of recent emails on the device and select the closest match. Using differential privacy, Apple learns only which synthetic embeddings are selected most frequently across all devices, without learning which embedding any specific device chose. The most frequently selected synthetic messages are then used to build training data for features like email summaries and Writing Tools. Apple also uses differential privacy to improve Genmoji, and it plans to expand these techniques to Image Playground, Image Wand, Memories Creation, and Visual Intelligence in future updates.
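To make the on-device matching step more concrete, here is a minimal Python sketch. It assumes embeddings are plain lists of floats, uses cosine similarity as the similarity measure, and interprets “closest match” as the synthetic embedding with the highest average similarity across the sampled emails. The function names and toy vectors are hypothetical; Apple has not published its code.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def closest_synthetic_index(
    synthetic_embeddings: Sequence[Vector],
    sampled_email_embeddings: Sequence[Vector],
) -> int:
    """Hypothetical on-device step: return the index of the synthetic embedding
    with the highest mean cosine similarity to the device's sampled emails.
    The email embeddings themselves stay on the device."""
    if not synthetic_embeddings or not sampled_email_embeddings:
        raise ValueError("need at least one synthetic and one sampled embedding")
    best_index, best_score = 0, -math.inf
    for i, synthetic in enumerate(synthetic_embeddings):
        score = sum(
            cosine_similarity(synthetic, email) for email in sampled_email_embeddings
        ) / len(sampled_email_embeddings)
        if score > best_score:
            best_index, best_score = i, score
    return best_index


# Toy 3-dimensional embeddings; real embedding models use far more dimensions.
synthetic = [
    [1.0, 0.0, 0.0],  # stands in for "Would you like to play tennis tomorrow at 11:30 AM?"
    [0.0, 1.0, 0.0],  # stands in for a synthetic message about a work deadline
    [0.0, 0.0, 1.0],  # stands in for a synthetic message about a package delivery
]
sampled_emails = [[0.1, 0.9, 0.0], [0.2, 0.8, 0.1]]
print(closest_synthetic_index(synthetic, sampled_emails))  # → 1
```

In this sketch, only the returned index would be a candidate for leaving the device, and only after privacy noise is applied.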

A key aspect of this method is that the contents of user emails never leave the device. Only a signal indicating the most similar synthetic embedding is sent to Apple, and noise is added to that signal to further protect privacy. This builds on Apple’s long-standing use of differential privacy in its opt-in Device Analytics program, which has helped the company gain insights into product usage while safeguarding user information. Apple also says it does not use private user data or interactions to train its foundation models, and that it filters out personally identifiable information, such as Social Security numbers, from publicly available internet content.
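The article does not say which differential privacy mechanism adds the noise. The sketch below uses k-ary randomized response, a standard local differential privacy technique, purely as an illustration: each device reports its true choice with a probability set by the privacy parameter ε and otherwise reports a random other index, and the server corrects for that noise to estimate how many devices picked each synthetic embedding. The function names and parameter values are assumptions, not Apple’s implementation.

```python
import math
import random
from collections import Counter
from typing import List, Sequence


def randomized_response(true_index: int, k: int, epsilon: float, rng: random.Random) -> int:
    """Device side (k-ary randomized response): report the true index with
    probability p = e^eps / (e^eps + k - 1); otherwise report one of the
    other k - 1 indices uniformly at random."""
    if k < 2:
        raise ValueError("need at least two synthetic embeddings")
    p = math.exp(epsilon) / (math.exp(epsilon) + k - 1)
    if rng.random() < p:
        return true_index
    other = rng.randrange(k - 1)  # pick among the k - 1 wrong answers
    return other if other < true_index else other + 1  # skip over the true index


def estimate_counts(reports: Sequence[int], k: int, epsilon: float) -> List[float]:
    """Server side: unbiased estimate of how many devices truly chose each index.
    E[observed_i] = true_i * p + (n - true_i) * q, solved for true_i."""
    n = len(reports)
    e = math.exp(epsilon)
    p = e / (e + k - 1)  # probability of reporting the true index
    q = 1 / (e + k - 1)  # probability of reporting any particular wrong index
    observed = Counter(reports)
    return [(observed[i] - n * q) / (p - q) for i in range(k)]


# Simulate 10,000 devices whose true closest matches favor synthetic message 1.
rng = random.Random(0)
k, epsilon = 3, 2.0
true_choices = [1] * 6000 + [0] * 3000 + [2] * 1000
reports = [randomized_response(c, k, epsilon, rng) for c in true_choices]
estimates = estimate_counts(reports, k, epsilon)
print([round(x) for x in estimates])  # roughly [3000, 6000, 1000]; exact values depend on the seed
```

No single report reveals a device's real choice, yet the corrected totals recover the overall ranking. A smaller ε adds more noise per report, so more participating devices are needed for equally accurate aggregate counts.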

The need for this approach stems from the limitations of synthetic data. While synthetic data can mimic user behavior, it often fails to capture the complexity of real interactions, especially for tasks like summarizing long emails or generating text. By analyzing user data on-device, Apple can create more accurate synthetic datasets, which in turn improve the performance of Apple Intelligence features. The process also involves multiple rounds of curation: Apple generates variations of the most popular synthetic messages, for example by replacing “tennis” with “soccer”, so that the dataset covers a broader range of scenarios.
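One curation round might look like the following sketch, which keeps the synthetic messages with the highest estimated popularity and expands them with keyword substitutions such as “tennis” to “soccer”. The ranking rule, the `top_k` cutoff, and the substitution table are hypothetical choices for illustration only.

```python
from typing import Dict, List, Sequence


def generate_variations(message: str, substitutions: Dict[str, List[str]]) -> List[str]:
    """Return copies of `message` with each matching keyword swapped for an alternative."""
    variations: List[str] = []
    for keyword, alternatives in substitutions.items():
        if keyword in message:
            variations.extend(message.replace(keyword, alt) for alt in alternatives)
    return variations


def curation_round(
    messages: Sequence[str],
    estimated_counts: Sequence[float],
    top_k: int,
    substitutions: Dict[str, List[str]],
) -> List[str]:
    """Keep the top_k messages by estimated popularity, then add their
    variations to form the candidate pool for the next round."""
    ranked = sorted(range(len(messages)), key=lambda i: estimated_counts[i], reverse=True)
    kept = [messages[i] for i in ranked[:top_k]]
    next_round = list(kept)
    for message in kept:
        next_round.extend(generate_variations(message, substitutions))
    return next_round


messages = [
    "Would you like to play tennis tomorrow at 11:30 AM?",
    "Your package has shipped.",
    "Can we move the meeting to Friday?",
]
estimated_counts = [5200.0, 800.0, 3100.0]  # hypothetical noisy popularity estimates
pool = curation_round(
    messages, estimated_counts, top_k=2, substitutions={"tennis": ["soccer", "basketball"]}
)
for candidate in pool:
    print(candidate)
```

Presumably, each new pool would be embedded again and sent through the same on-device matching and noisy aggregation before the next round.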

The announcement has generated both excitement and skepticism. A report by Bloomberg noted that this method is a strategic effort to keep pace with AI competitors, but it may also concern users who are wary of any data usage, even with privacy protections. Critics, as highlighted by AppleInsider, have pointed out that differential privacy, while robust, is not infallible and could potentially allow for re-identification in rare cases. Additionally, since participation in Device Analytics is opt-in, the data may not fully represent Apple’s diverse user base, which could skew the trends Apple learns and, in turn, the resulting training data.

Apple’s timing is notable, as the company recently delayed the rollout of advanced Siri features, raising questions about its ability to compete in the AI race. By adopting on-device data analysis, Apple aims to improve its models without compromising its privacy principles, which have long set it apart from competitors. The company’s approach could also influence the broader tech industry, as noted by NewsBytes, potentially encouraging other firms to adopt similar privacy-preserving techniques for AI training.

The debate over this technology highlights a larger tension in the tech world: balancing innovation with user trust. Apple’s use of differential privacy may set a new standard for privacy in AI, but its success will depend on how well it addresses user concerns and delivers tangible improvements in Apple Intelligence. As this method rolls out, it will be crucial to monitor its impact on both AI performance and user sentiment.