Instagram has introduced an AI system to detect and safeguard teen users, a major update unveiled by Meta in 2025. Dubbed the “adult classifier,” the technology identifies users under 18 from profile data, engagement patterns, and birthday mentions, then enforces stricter safety settings, with a reported accuracy of 98%. Part of a global push to protect younger audiences, it is reshaping how social media platforms operate, but it is also raising concerns about data privacy and user autonomy.

This is more than a platform tweak: it is a global change that could influence digital safety standards. How will AI balance protection and privacy? Let’s break it down.

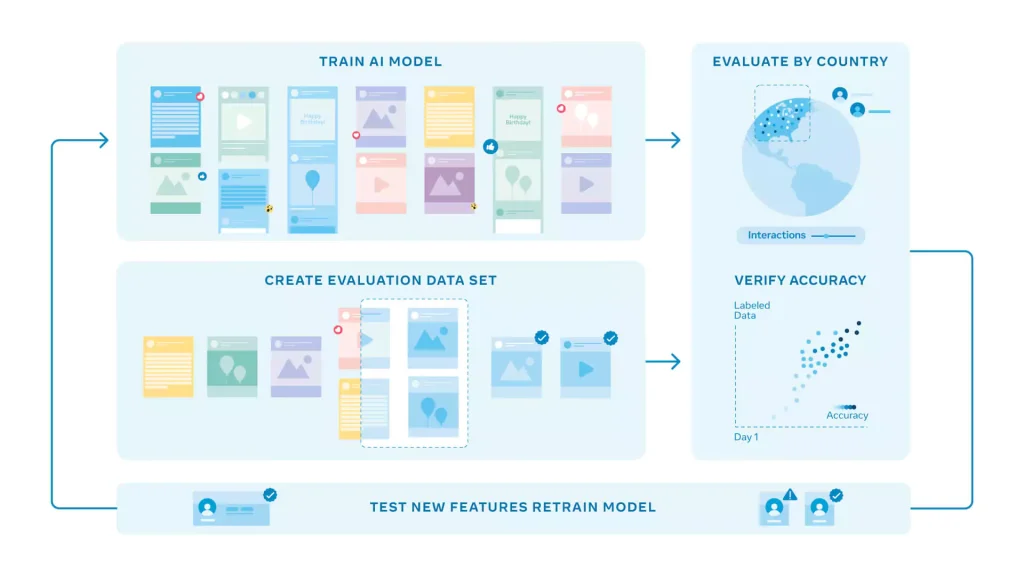

The move stems from years of scrutiny over teen safety, with Meta investing heavily in AI solutions. The “adult classifier” scans signals like friend interactions and content preferences, as detailed in a Herald Scotland report on age verification. First piloted in 2022, it now automatically sets teen accounts to private, limits adult messaging, and filters harmful content, addressing calls from regulators and parents.

The AI processes millions of data points daily. It uses machine learning to estimate ages, with 98% accuracy for users under 25, per a Verge analysis of AI settings. When an account is flagged as belonging to a teen, safeguards kick in: restricted direct messages, curated feeds, and parental oversight options. This real-time protection aims to shield over 50 million teen users globally, but the volume of data it requires has prompted privacy debates.
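The flag-then-protect flow described above can be sketched in a few lines of Python. This is only an illustration, not Meta's implementation: the `Account` class, its field names, and the `apply_teen_safeguards` function are hypothetical, and the age estimate is assumed to arrive from some upstream model.

```python
from dataclasses import dataclass

TEEN_AGE_LIMIT = 18  # the article says the classifier targets users under 18


@dataclass
class Account:
    """Hypothetical account record; field names are illustrative only."""
    username: str
    estimated_age: float  # assumed output of an age-estimation model
    is_private: bool = False
    adult_dms_allowed: bool = True
    sensitive_content_filter: bool = False
    parental_oversight_available: bool = False


def apply_teen_safeguards(account: Account) -> Account:
    """Switch on the protections the article lists if the account looks like a teen's."""
    if account.estimated_age < TEEN_AGE_LIMIT:
        account.is_private = True                    # account set to private
        account.adult_dms_allowed = False            # adult messaging limited
        account.sensitive_content_filter = True      # harmful content filtered
        account.parental_oversight_available = True  # parental oversight options
    return account


teen = apply_teen_safeguards(Account("sample_teen", estimated_age=15.2))
adult = apply_teen_safeguards(Account("sample_adult", estimated_age=31.0))
print(teen.is_private, teen.adult_dms_allowed)
print(adult.is_private, adult.adult_dms_allowed)
```

A real system would presumably act on a confidence score rather than a single age number, which is where the appeal process mentioned later in the article comes in.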

The upside is clear. The 98% accuracy could reduce exposure to predators and mental health risks, a focus of an AP news piece on teen protection. Parents gain tools to monitor activity, while Meta hopes safer platforms boost user retention. For advertisers, it could mean a more trusted environment, potentially increasing engagement.

But challenges emerge. Biased training data, perhaps from skewed regional inputs, could cause the system to misidentify users, wrongly restricting adults or missing teens. Privacy concerns also grow with the use of video selfies for verification, as noted in a Fast Company article on AI trials. Users can challenge decisions, but unclear data policies leave gaps. Cultural variations, such as differing definitions of adulthood, might also trip up the system.
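One way to see how a strong headline accuracy figure can hide regional bias is to break the errors down by group. The sketch below is a hedged illustration: the region names and sample records are invented for the example, and `error_rates_by_group` is a hypothetical helper, not part of any Meta tooling.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """records: iterable of (group, is_teen_actual, is_teen_predicted) tuples."""
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "adults": 0, "teens": 0})
    for group, actual, predicted in records:
        c = counts[group]
        if actual:
            c["teens"] += 1
            if not predicted:
                c["fn"] += 1  # a teen the classifier missed
        else:
            c["adults"] += 1
            if predicted:
                c["fp"] += 1  # an adult wrongly treated as a teen
    return {
        g: {
            "adults_flagged_as_teens": c["fp"] / c["adults"] if c["adults"] else 0.0,
            "teens_missed": c["fn"] / c["teens"] if c["teens"] else 0.0,
        }
        for g, c in counts.items()
    }


# Made-up sample data: (region, actually a teen?, predicted a teen?)
sample = [
    ("region_a", True, True),
    ("region_a", True, True),
    ("region_a", False, False),
    ("region_a", False, False),
    ("region_b", True, False),
    ("region_b", True, True),
    ("region_b", False, True),
    ("region_b", False, False),
]
rates = error_rates_by_group(sample)
for region, r in rates.items():
    print(region, r)
```

In this toy data the classifier makes no mistakes in `region_a` but gets half of each category wrong in `region_b`, the kind of gap an overall accuracy number would average away.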

Public sentiment varies. X posts show teens feeling restricted, with one writing, “AI controlling my account is too much.” Parents, however, welcome it, calling it a “safety win.” This divide, which Meta’s announcements do not address, could build pressure for user input in future updates, such as customizable settings.

Long-term questions persist. Will the AI adapt to evolving teen trends or new threats like AI-generated content? Meta’s year-long evaluation will test this, but the absence of a plan looking a decade ahead remains a gap. Success could prompt platforms like Snapchat to follow suit.

As Instagram rolls out this AI teen guard, the world observes. Will it set a safety standard or spark privacy battles? Early results will shape its path.
