Google has unveiled key AI-powered accessibility advancements for Android and Chrome to mark Global Accessibility Awareness Day (GAAD) 2025. The headline update integrates Gemini AI with Android’s TalkBack screen reader, enabling detailed image descriptions and interactive Q&A about visual content. The announcements also include notable improvements to Chrome’s page zoom and to Live Caption.

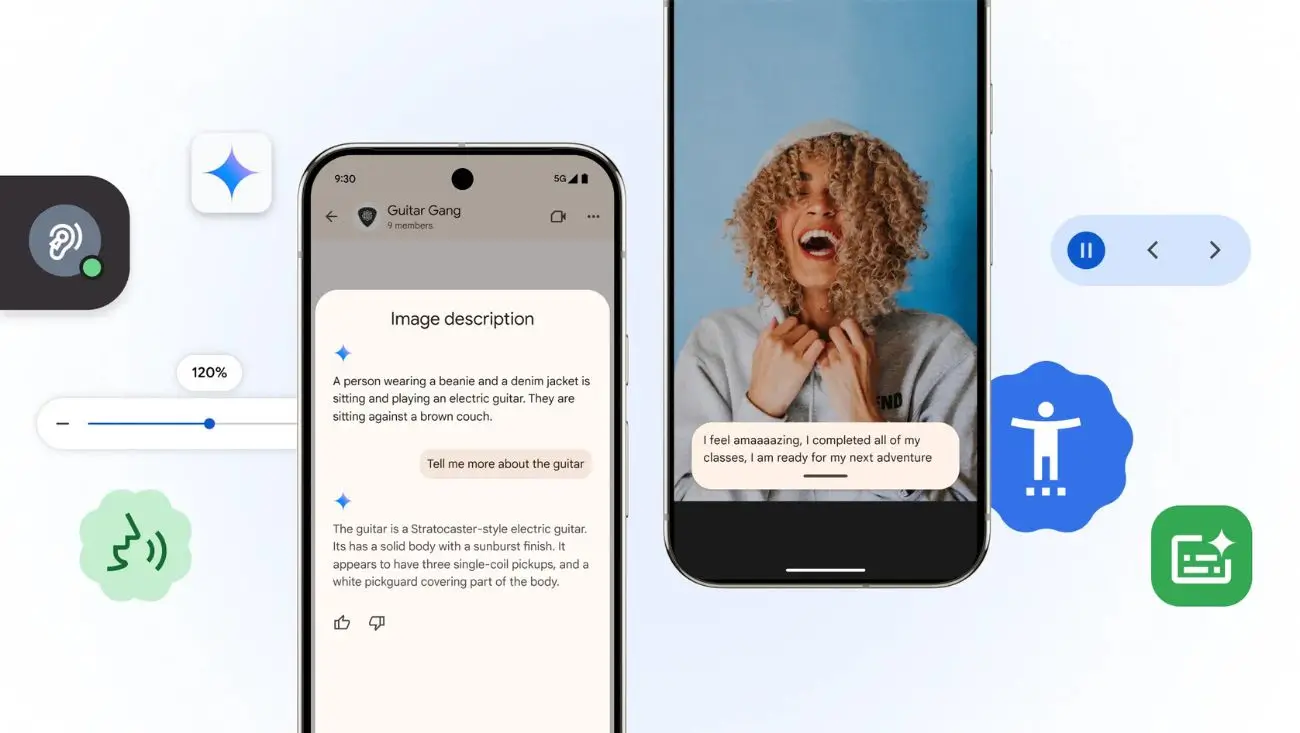

Announced on Google’s official blog for GAAD 2025, the new features mark a significant step in assistive technology. At their center is the integration of Google’s Gemini AI model into TalkBack, Android’s built-in screen reader. With Gemini, TalkBack can give users far more detailed and contextual descriptions of images and can answer direct questions about what those images contain.

Integrating Gemini into TalkBack is a substantial leap beyond earlier screen reader capabilities. Traditional screen readers have depended on alt text for image descriptions, and that alt text is often missing. With Gemini’s multimodal understanding, TalkBack can instead analyze the image itself, on device where possible. As a result, users who are blind or have low vision can get richer information about images on websites, in apps, or in messages, and can ask TalkBack follow-up questions about specific details, making the experience more dynamic and informative. This mirrors broader efforts in the AI field to make interactions more intuitive, such as OpenAI’s iterative improvements to ChatGPT with models like GPT-4.1.

Technical Deep Dive: New Google AI Accessibility Features

Beyond the headline Gemini-TalkBack integration, the latest Google AI accessibility update encompasses several other technically significant improvements:

- Advanced Image Understanding in TalkBack with Gemini: The core feature of this update lets TalkBack generate rich descriptions for images that lack alt text. Users can then ask follow-up questions (e.g., “Describe the style of clothing” or “What objects are in the background?”). Where feasible, the feature uses Gemini Nano for on-device processing, which improves both privacy and responsiveness.

- Enhanced Page Zoom in Chrome for Android: In response to feedback from users with low vision, Chrome for Android is introducing a more capable page zoom. It magnifies pages up to 300% while preserving the page layout and reflowing text so it stays readable. Users can also set a persistent default zoom level.

- Live Caption Accuracy and Customization: Live Caption, which provides real-time captions for any audio playing on an Android device, is being updated. The updates aim to recognize a broader range of sounds and speech patterns more accurately and to give users more granular customization options.

- Wear OS Accessibility Improvements: The enhancements also extend to Wear OS, including easier access to key tools through watch face complications and generally improved screen reader support on wearable devices.

These innovations build on extensive AI research and a steadfast commitment to inclusive product design. The use of on-device AI, such as Gemini Nano for specific TalkBack features, is especially notable for privacy, because potentially sensitive visual data can be processed locally on the device. This kind of responsible data handling matters more as AI systems become more deeply integrated into personal devices and workflows, a concern that applies across AI applications, including those being developed for complex scientific research by initiatives like Meta AI Science.

The technical hurdles in enabling an AI to accurately interpret and articulate the nuances of diverse visual content are considerable. By deploying Gemini’s sophisticated multimodal capabilities, Google is aiming to significantly reduce the information access gap for users with visual impairments, thereby fostering a more equitable digital environment. These advancements also benefit from, and contribute to, broader progress in computer vision and natural language processing. Of course, as AI tools become more powerful, vigilance against misuse, such as the generation of deepfakes and misinformation, remains a critical aspect of responsible AI stewardship.

By building these features directly into Android and Chrome, two widely adopted platforms, Google makes them available to a massive global user base without separate installations. This mainstreaming strategy is vital for widespread adoption and for establishing inclusive design as a standard in the tech industry. By offering these advanced tools, Google also helps the broader developer community build more accessible applications within its ecosystem. The company’s practice of sharing insights and tools often benefits the entire field, much as transparency from organizations like OpenAI about AI model safety is welcomed by the tech community.

The GAAD 2025 announcements underscore Google’s continued drive to innovate in accessibility. While these new tools represent substantial progress, Google acknowledges that creating a universally accessible digital world is an ongoing effort. Future work will likely focus on improving AI model accuracy, expanding language support, and addressing an even broader range of accessibility needs. The overarching theme is using AI to enhance human capabilities, a pursuit that is promising but also raises complex challenges, as debates over AI’s role in creative fields such as film production show.