A new cybersecurity threat has emerged as cybercriminals use fake AI video generation platforms to distribute Noodlophile Stealer malware, according to a Morphisec report. Promoted through deceptive Facebook ads, these fraudulent sites trick users into downloading malicious software that steals sensitive data, including browser credentials, cryptocurrency wallets, and personal information. As AI tools become increasingly popular, this campaign underscores the growing risks of cyber exploitation and the urgent need for enhanced digital safety measures.

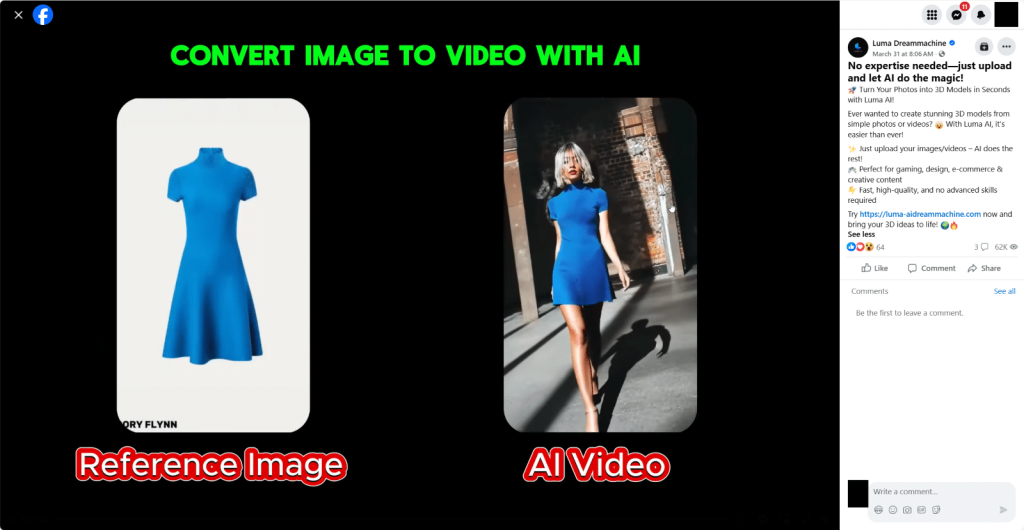

The Noodlophile Stealer campaign uses a multi-stage attack chain. Morphisec’s investigation found that attackers target users through Facebook groups, luring them with promises of free AI-generated videos. Victims are directed to fake websites that prompt them to upload images for video creation, and are then instructed to download a ZIP file disguised as a legitimate tool, often mimicking CapCut, a well-known video editing application. Once the file is opened, it installs Noodlophile Stealer, which extracts browser data such as cookies, passwords, and credit card details from browsers including Google Chrome, Microsoft Edge, and Mozilla Firefox. In some cases, it also deploys the XWorm loader, granting attackers remote access to the victim’s device. This tactic mirrors other AI-themed cyber threats, in which malicious actors exploit trust in emerging technologies.

The scope of this campaign is concerning. Noodlophile Stealer, which had not previously been documented in public malware trackers, targets a wide range of sensitive data, making it a significant threat to both individuals and businesses. The use of Facebook ads amplifies its reach, as the platform inadvertently becomes a conduit for distributing malicious content to a broad audience. Cybersecurity News identified one such fake site, editproai[dot]pro, which poses as an AI-based editing tool called “EditPro” to deceive users. This exploitation of AI enthusiasm resembles the pattern seen in AI privacy scandals, where technology meant to enhance user experiences is weaponized against the people using it.
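For readers who maintain their own blocklists, the defanged domain quoted above can be turned back into a matchable form. The following is a minimal Python sketch, not a method from the Morphisec report: the function names `refang` and `is_blocked` are hypothetical, and the list contains only the single domain named in this article.

```python
from urllib.parse import urlsplit

# The one indicator named in the article, kept in its defanged form.
DEFANGED_INDICATORS = ["editproai[dot]pro"]


def refang(indicator):
    """Convert a defanged domain such as 'example[dot]com' to 'example.com'."""
    return indicator.strip().lower().replace("[dot]", ".").replace("[.]", ".")


# Hypothetical blocklist built from the defanged indicators above.
BLOCKED_DOMAINS = {refang(item) for item in DEFANGED_INDICATORS}


def is_blocked(url):
    """Return True if the URL's host is a blocked domain or one of its subdomains."""
    # urlsplit only recognizes the host when a scheme or leading '//' is present.
    candidate = url if "://" in url else "//" + url
    host = (urlsplit(candidate).hostname or "").lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS)


if __name__ == "__main__":
    for link in ["https://www.editproai.pro/download", "https://example.com/"]:
        print(link, "->", "blocked" if is_blocked(link) else "not on the list")
```

Matching on the parsed hostname rather than on the raw string avoids false hits when the domain merely appears in a path or query string. A single-entry list like this one is only illustrative, since campaigns of this kind typically rotate domains.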

The fake platforms are designed to appear legitimate, often featuring professional layouts and cookie banners to build trust. However, their malicious intent becomes evident once the payload is downloaded. This campaign preys on the growing interest in AI content creation, a trend that has also driven the development of AI communication tools aimed at improving user interactions. Unfortunately, it also creates opportunities for cybercriminals to target unsuspecting users, particularly those who may not recognize the signs of a scam, highlighting the need for greater awareness and education.

Protecting against Noodlophile Stealer requires proactive measures. Cybersecurity experts recommend avoiding downloads from unverified sources, especially those promoted through social media ads, and using trusted antivirus software to detect and block malicious files. Morphisec suggests employing tools with dynamic detection capabilities, such as Palo Alto Networks’ Cortex XDR, to stay ahead of evolving threats. However, the digital divide poses a significant challenge, as not all users have access to such tools or the knowledge to identify scams, a recurring issue in AI accessibility efforts. This gap underscores the need for broader digital literacy initiatives, similar to those seen in AI hardware discussions that aim to empower users with emerging technologies.
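One practical way to act on the "unverified downloads" advice is to look inside a ZIP archive before opening anything in it. The sketch below is an illustration only and no substitute for antivirus or endpoint protection: it lists archive entries whose names end in an executable or script extension and highlights cases where a media-style extension comes first. The extension sets and the function name `flag_suspicious_entries` are assumptions made for this example, not details taken from the Morphisec report.

```python
import io
import zipfile
from pathlib import PurePosixPath

# Assumption: an illustrative, incomplete set of Windows executable and script extensions.
RISKY_EXTENSIONS = {".exe", ".scr", ".bat", ".cmd", ".com", ".js", ".vbs", ".ps1", ".msi", ".lnk"}
# Assumption: harmless-looking extensions that might be used as a decoy before the real one.
DECOY_EXTENSIONS = {".mp4", ".mov", ".avi", ".jpg", ".png", ".pdf", ".docx"}


def flag_suspicious_entries(zip_source):
    """Return (name, reason) pairs for archive entries that deserve a closer look.

    zip_source may be a filesystem path or a binary file-like object.
    Only file names are inspected; nothing is extracted or executed.
    """
    findings = []
    with zipfile.ZipFile(zip_source) as archive:
        for info in archive.infolist():
            if info.is_dir():
                continue
            suffixes = [s.lower() for s in PurePosixPath(info.filename).suffixes]
            if not suffixes or suffixes[-1] not in RISKY_EXTENSIONS:
                continue
            if len(suffixes) >= 2 and suffixes[-2] in DECOY_EXTENSIONS:
                findings.append((info.filename, "double extension hides an executable"))
            else:
                findings.append((info.filename, "executable or script file"))
    return findings


def build_demo_archive():
    """Create a small in-memory archive with placeholder text files for the demo."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("readme.txt", "harmless text")
        archive.writestr("clip.mp4.exe", "placeholder bytes, not a real program")
    buffer.seek(0)
    return buffer


if __name__ == "__main__":
    for name, reason in flag_suspicious_entries(build_demo_archive()):
        print(f"{name}: {reason}")
```

A name-based check like this catches only the simplest disguises. An empty result does not mean an archive is safe, and a download prompted by a social media ad is best left unopened regardless.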

The rise of Noodlophile Stealer reflects the broader implications of AI’s rapid adoption. While AI offers immense potential for innovation, its popularity also gives cybercriminals a new lure for exploiting users. Social media platforms like Facebook must improve their ad vetting processes to curb the spread of such campaigns, and users must exercise caution when engaging with unfamiliar AI tools. The challenge is a familiar one in cybersecurity, where balancing technological advancement with security remains a critical concern. As AI continues to evolve, the tech industry must prioritize user safety to prevent such threats from undermining the benefits of innovation.

The Noodlophile Stealer campaign serves as a stark reminder of the risks lurking behind the allure of AI technology. While fake AI video platforms may promise instant creativity, they can deliver devastating consequences for unsuspecting users. Addressing this threat will require a collective effort from tech companies, cybersecurity experts, and users themselves to foster a safer digital environment. What do you think about the growing risks of fake AI tools—how can we better protect ourselves online? Share your thoughts in the comments—we’d love to hear your perspective on this alarming trend.